11. Two-layer Neural Network

Multilayer Neural Networks

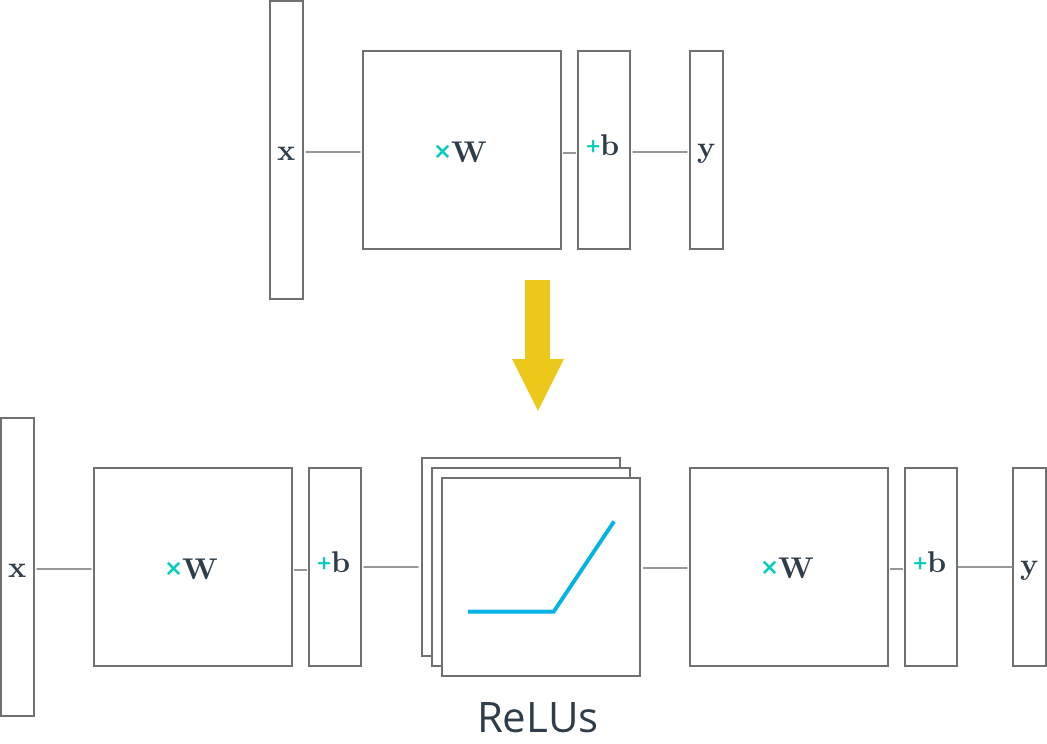

In the previous lessons and the lab, you learned how to build a single-layer neural network. Now you'll learn how to build multilayer neural networks with TensorFlow. Adding a hidden layer to a network allows it to model more complex functions. Using a non-linear activation function on the hidden layer is also what lets the network model non-linear functions: without one, stacked linear layers still compute a single linear function.
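The point about the activation function can be checked numerically. Below is a minimal NumPy sketch of a two-layer forward pass. The lesson itself uses TensorFlow, so NumPy here is a stand-in, and the helper `two_layer_forward`, the layer sizes, and the random weights are all hypothetical. It shows that with no activation on the hidden layer, the two layers reduce to one linear layer with weights `W1 @ W2` and bias `b1 @ W2 + b2`.

```python
import numpy as np

def two_layer_forward(x, W1, b1, W2, b2, activation=None):
    """Hypothetical helper: input -> hidden layer -> output layer.

    `activation` is applied to the hidden layer; None keeps it linear.
    """
    hidden = x @ W1 + b1
    if activation is not None:
        hidden = activation(hidden)
    return hidden @ W2 + b2

rng = np.random.default_rng(0)
x = rng.normal(size=(4, 3))   # batch of 4 examples, 3 features each
W1 = rng.normal(size=(3, 5))  # 3 inputs -> 5 hidden units
b1 = rng.normal(size=5)
W2 = rng.normal(size=(5, 2))  # 5 hidden units -> 2 outputs
b2 = rng.normal(size=2)

# Without an activation, the two layers collapse into one linear layer.
linear_out = two_layer_forward(x, W1, b1, W2, b2)
collapsed = x @ (W1 @ W2) + (b1 @ W2 + b2)
print(np.allclose(linear_out, collapsed))  # True

# With ReLU on the hidden layer, the output is no longer
# a linear (affine) function of x in general.
relu_out = two_layer_forward(x, W1, b1, W2, b2,
                             activation=lambda h: np.maximum(0, h))
print(relu_out.shape)  # (4, 2)
```

The collapse follows from expanding `(x @ W1 + b1) @ W2 + b2`, which is exactly `x @ (W1 @ W2) + (b1 @ W2 + b2)`.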

The first thing we'll implement in TensorFlow is a ReLU hidden layer. A ReLU, or rectified linear unit, is a non-linear function: it outputs 0 for negative inputs and x for all inputs x > 0, that is, f(x) = max(0, x).
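The definition f(x) = max(0, x) maps directly to code, applied element-wise. Here is a minimal NumPy sketch. In TensorFlow the same operation is available as `tf.nn.relu`. The input values below are arbitrary examples.

```python
import numpy as np

def relu(x):
    """Rectified linear unit: 0 for negative inputs, x otherwise."""
    return np.maximum(0, x)

inputs = np.array([-2.0, -0.5, 0.0, 1.5, 3.0])
print(relu(inputs).tolist())  # [0.0, 0.0, 0.0, 1.5, 3.0]
```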

As before, the following sections build on the knowledge from the Deep Neural Networks lesson. If you need to refresh your memory, you can go back and watch those videos again.